ChatGPT, Chat Generative Pre-Trained Transformer,1 is an artificial intelligence (AI) language model trained on a massive set of internet text data using machine learning and natural language processing (NLP) algorithms. It can generate human-like responses to a wide range of questions and prompts in multiple languages and subject areas.2 The main aim of this study was to evaluate how ChatGPT performs when answering multiple-choice questions in a highly specialized area of state-of-the-art medical expertise.

We conducted a descriptive analysis of the performance of ChatGPT (OpenAI, San Francisco; Version: 9) in the 2022 competitive exams for the post of Specialist in thoracic surgery announced by the Andalusian Health Service.3 This particular exam was chosen because it uses a multiple-choice format with 4 possible answers, only one of which is correct. Participants answer 2 sets of questions: one, consisting of 100 direct questions, is theoretical, and the other, consisting of 50 questions, is practical and addresses clinical scenarios focused on critical reasoning.

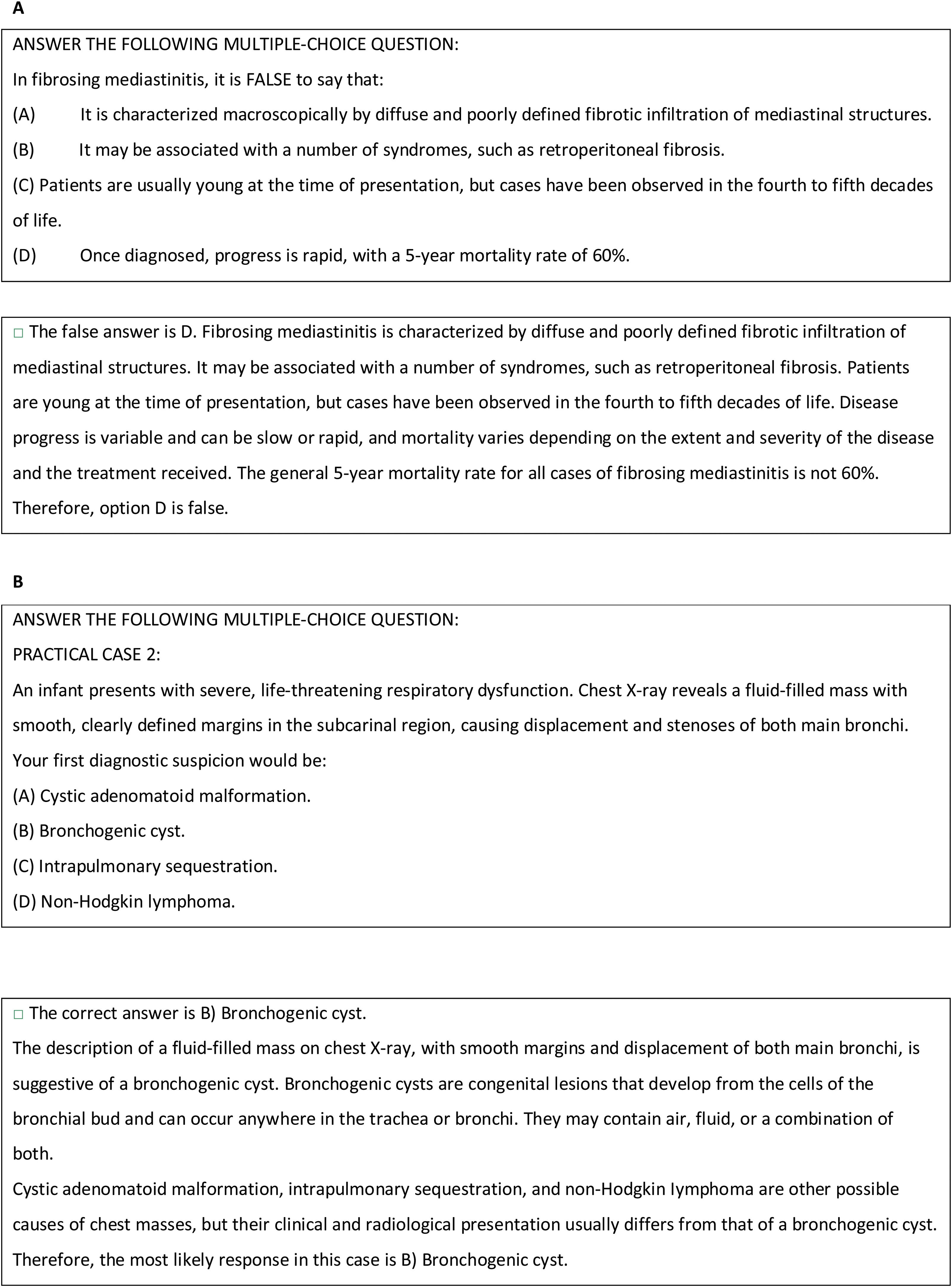

ChatGPT answered the questions on its online platform between 10/02/2023 and 15/02/2023. Each question was preceded by the following prompt: “ANSWER THE FOLLOWING MULTIPLE-CHOICE QUESTION:” A separate session was used for each of the theoretical questions, while the practical questions were all answered in the same session, exploiting the model's memory retention within a session to increase its performance. The definitive official answer template published by the public administration3 was used as the answer key. The examination consisted of 146 questions (theoretical section: 98/practical section: 48) after the Andalusian Health Service excluded 7 questions and included another 3 reserve questions.

ChatGPT answered 58.90% (86) of the questions correctly: inferential analysis revealed a significant difference with respect to the rate of correct answers that could be attributed to chance (25%), at a confidence level of 99% (p<0.001). The rate of correct answers was 63.2% (62) for the theoretical section compared to 50% (24) for the practical section. Scoring criteria were applied for each correct answer, including a penalty of −0.25 for each incorrect answer and weighting criteria for each section of the examination phase. The threshold specified in the official call for passing the exam was 60% of the average of the best 10 scores.3 A pass mark of 40 points was set. The artificial intelligence model would therefore have passed this part of the access examination for thoracic surgery physician/specialist with a score of 45.79 points.
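The arithmetic behind these figures can be sketched in a few lines of Python. This is an illustrative reconstruction, not the authors' analysis: the text does not name the statistical test or state the section weights. The sketch assumes a one-sided exact binomial test against the 25% chance rate, assumes that no question was left blank (so incorrect answers = total − correct), and assumes that each section is scaled to a maximum of 50 points. The function names are hypothetical.

```python
from math import comb


def binomial_upper_tail(successes: int, trials: int, p_chance: float) -> float:
    """Exact P(X >= successes) for X ~ Binomial(trials, p_chance)."""
    q = 1.0 - p_chance
    return sum(
        comb(trials, k) * p_chance**k * q ** (trials - k)
        for k in range(successes, trials + 1)
    )


def section_points(correct: int, total: int,
                   penalty: float = 0.25, max_points: float = 50.0) -> float:
    """Penalized score for one section, scaled to max_points.

    Assumptions (not stated in the text): every question was answered,
    and each section is worth max_points = 50.
    """
    incorrect = total - correct          # assumption: no blank answers
    net = correct - penalty * incorrect  # -0.25 per incorrect answer
    return net / total * max_points


if __name__ == "__main__":
    # 86 correct out of 146 versus a 25% chance rate (4 options per question)
    p_value = binomial_upper_tail(86, 146, 0.25)
    print(f"one-sided exact binomial p-value: {p_value:.3g}")

    theory = section_points(62, 98)     # theoretical section
    practical = section_points(24, 48)  # practical section
    print(f"theoretical: {theory:.2f}, practical: {practical:.2f}, "
          f"total: {theory + practical:.2f}")
```

Under these assumptions the p-value is far below 0.001, and the two sections contribute 27.04 and 18.75 points, giving a total of 45.79. This agrees with the reported score, but the official weighting should be checked against the call before relying on it.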

Our results are in line with the existing literature on the potential of ChatGPT for completing question-answer tasks in different areas of knowledge, including the medical field. For example, evidence has been published of a correct response rate of over 60% for the United States Medical Licensing Examination (USMLE) Step 1, over 57% for the USMLE Step 2,4 and over 50% in the access examination for specialist residency posts in Spain in 2022.5

In our study, the AI tool performed worse when answering practical questions compared to theoretical questions, suggesting that it has difficulties in responding to clinical practice scenarios that require critical reasoning. As limitations of the study, it should be noted that we did not analyze the model's ability to respond correctly depending on the way the questions were formulated, nor did we analyze the justification that the AI model gave for each correct or incorrect answer (Fig. 1).

In conclusion, the ChatGPT model was capable of passing a competitive exam for the post of specialist in thoracic surgery, although differences were observed in its performance in areas in which critical reasoning is required. The emergence of AI tools that can resolve a variety of questions and tasks, including in the health field, their potential for development, and their incorporation into our training and daily clinical practice are a challenge for the scientific community.

Conflict of Interests

The authors state that they have no conflict of interests.